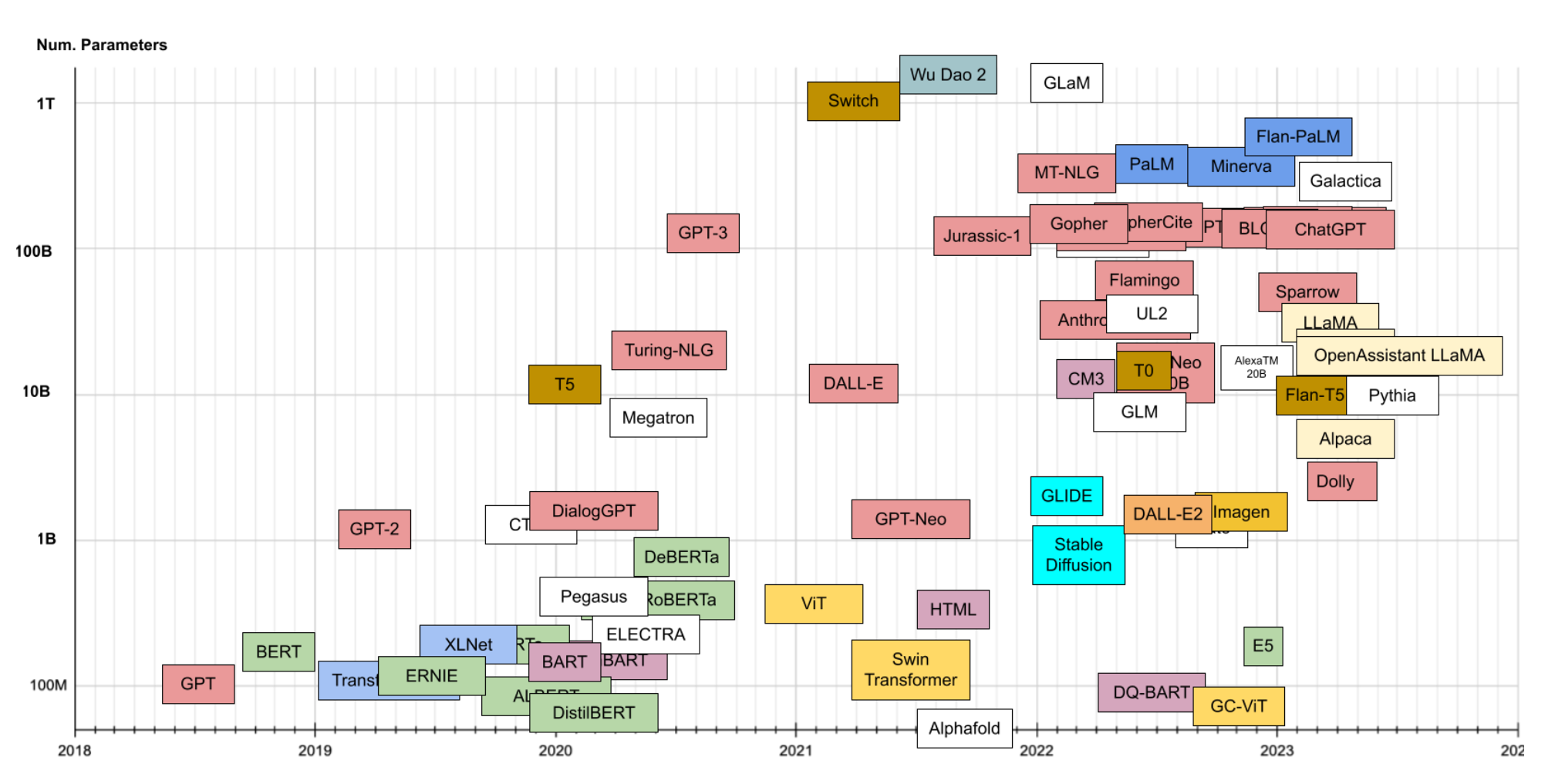

Since the last time I presented on NLP, there have been some… developments.

I updated my Bagging to BERT tutorial, mainly to use spaCy throughout. My thinking is that all the excess PyTorch code and the varied implementations weren’t as helpful as a single structure that lets different model architectures be swapped in.

spaCy is pretty awesome for allowing that kind of modularity, so I figured I’d use it as the template.

In future work, I’d like to expand this to get into the more advanced LLM world.

For now, the materials are available in the GitHub repo.

My colleagues at Northeastern also recorded the tutorial (though it may be a bit hard to hear).

Ben
